These aren't the packets I asked for

Since migrating a VM from qemu userspace networking to a dedicated IP and bridged networking, I've been seeing abysmal throughput when downloading data from it. Instead of the usual 1.3MiB/s I get 30KiB/s tops. A look with Wireshark shows a ton of retransmitted TCP segments. I fire up mtr to check whether there is anything noteworthy on the route. Nada.

I go on to complain about it on IRC and promptly get a good hint: large packets. I probe my home connection with large ICMP pings and UDP datagrams that have the Don't Fragment flag set. Unfortunately, there is nothing out of the ordinary to see. An MTU of 1500 is too large, but that was expected, since I'm on a DSL connection that's wrapped in PPPoE. Fine, an MTU of 1492 it is. But this has nothing to do with the actual problem after all. Next, I write a quick pair of Python scripts, a TCP server and a client, one for each end:

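```python
#!/usr/bin/env python3
# Server side: wait for one connection, then send a single segment's worth of data.
import socket

s = socket.socket()
s.setsockopt(socket.SOL_SOCKET, socket.SO_REUSEADDR, 1)
s.bind(('0.0.0.0', 58728))
s.listen()
print("Waiting for connection")
try:
    c, _addr = s.accept()
    input("Press Return to send")
    # 1500 (Ethernet MTU) - 8 (PPPoE) - 20 (IPv4 header) - 20 (TCP header) = 1452 bytes
    c.sendall(b'X' * (1500 - 8 - 20 - 20))
    input("Press Return to exit")
    c.close()
finally:
    s.close()
```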

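```python
#!/usr/bin/env python3
# Client side: connect to the server and report how much data arrives.
import socket

s = socket.socket()
s.connect(('server.example.com', 58728))
data = s.recv(4096)
print(f"Received {len(data)} bytes")
input("Press Return to exit")
s.close()
```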

I calculate the maximum payload I should be able to get through, accounting for an 8-byte PPPoE header from my DSL connection, a 20-byte IP header and a 20-byte TCP header: 1500 - 8 - 20 - 20 leaves me with 1452 bytes of payload. I start the script on the server and the client locally, fire up Wireshark on both ends (for the server via `ssh server.example.com tcpdump -i ens3 -U -n -s 0 -w - 'tcp port 58728' | wireshark-gtk -k -i -`), and let the script do its thing.
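The overhead arithmetic above, written out as a small helper. It's a sketch that assumes IPv4 without IP options; `max_tcp_payload` and the constant names are made up for illustration, and the `tcp_options` parameter covers the case where the TCP header grows beyond its minimum 20 bytes.

```python
# Per-packet overhead on the path described above (all sizes in bytes).
ETHERNET_MTU = 1500   # MTU of the Ethernet link the PPPoE session runs over
PPPOE_OVERHEAD = 8    # 6-byte PPPoE header + 2-byte PPP protocol ID
IPV4_HEADER = 20      # IPv4 header without options
TCP_HEADER = 20       # TCP header without options


def max_tcp_payload(link_mtu: int = ETHERNET_MTU,
                    encap_overhead: int = PPPOE_OVERHEAD,
                    tcp_options: int = 0) -> int:
    """Largest TCP payload that fits into a single packet on the narrowest link."""
    path_mtu = link_mtu - encap_overhead
    return path_mtu - IPV4_HEADER - TCP_HEADER - tcp_options


if __name__ == "__main__":
    print(ETHERNET_MTU - PPPOE_OVERHEAD)    # prints 1492, the MTU inside PPPoE
    print(max_tcp_payload())                # prints 1452, as used in the server script
    print(max_tcp_payload(tcp_options=12))  # prints 1440 with 12 bytes of TCP options
```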

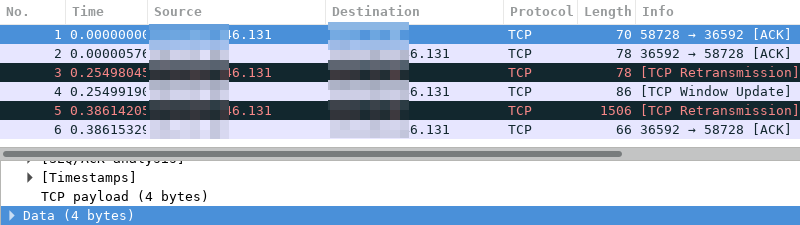

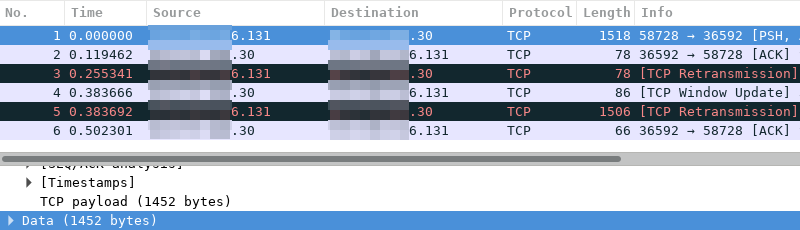

Instead of the expected TCP segment with 1452 bytes of payload, which I do see in the server's Wireshark instance, the local instance shows one with only 4 bytes of data! It is followed by two retransmissions carrying 12 and 1440 bytes of payload respectively. Looking at the TCP header, that split made sense afterwards: the header was 32 bytes instead of the expected 20, because it unexpectedly carried an options part. After lowering the payload by another 12 bytes, everything is fine.
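The TCP header length isn't fixed: its 4-bit Data Offset field counts 32-bit words, and everything beyond the first 20 bytes is options. The sketch below reads that field from a raw header. Twelve bytes is exactly the size of the timestamps option padded with two NOPs, which Linux sends by default, so it is a plausible candidate here; that is an assumption, not something the captures above confirm. `build_tcp_header` and the destination port 40000 are hypothetical test helpers.

```python
import struct

TCP_BASE_HEADER = 20  # fixed part of the TCP header, in bytes


def tcp_header_length(tcp_header: bytes) -> int:
    """Header length in bytes, read from the 4-bit Data Offset field.

    Data Offset sits in the upper nibble of byte 12 and counts 32-bit words.
    """
    return (tcp_header[12] >> 4) * 4


def build_tcp_header(options: bytes = b"") -> bytes:
    """Build a hypothetical TCP header for testing (checksum is left at zero)."""
    if len(options) % 4:
        raise ValueError("TCP options must be padded to a multiple of 4 bytes")
    data_offset_words = (TCP_BASE_HEADER + len(options)) // 4
    fixed = struct.pack(
        "!HHIIBBHHH",
        58728, 40000,            # source port, destination port (made up)
        1, 1,                    # sequence and acknowledgement numbers
        data_offset_words << 4,  # data offset in the upper nibble
        0x18,                    # flags: PSH + ACK
        65535, 0, 0,             # window, checksum, urgent pointer
    )
    return fixed + options


# NOP, NOP, then the 10-byte timestamp option (kind 8, length 10, TSval, TSecr).
TIMESTAMP_OPTIONS = b"\x01\x01\x08\x0a" + struct.pack("!II", 123456, 654321)

if __name__ == "__main__":
    header = build_tcp_header(TIMESTAMP_OPTIONS)
    length = tcp_header_length(header)
    options_len = length - TCP_BASE_HEADER
    print(length, options_len, 1452 - options_len)  # prints: 32 12 1440
```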

But anyway, what's with those 4 bytes?! Some router must be rewriting my TCP segments! Time to find the culprit by asking people whether they experience the same problem and comparing routes. I quickly find someone with a similar route from the server who is not having any problems. From the server's network through Cogent (up to hop 5) both routes are the same; then mine goes off to DTAG and theirs to Telia. On my side there are just two more hops, so one of them must be rewriting my TCP! It's probably not hop 7, because that's the router everything goes through and I have no problems elsewhere, so hop 6 it is!

```
                              My traceroute [vUNKNOWN]
server.example.com                                           2018-02-19T22:16:06+0000
Keys:  Help   Display mode   Restart statistics   Order of fields   quit
                                             Packets               Pings
 Host                                      Loss%   Snt   Last   Avg  Best  Wrst StDev
 …
 5. be3363.ccr31.jfk04.atlas.cogentco.com   0.0%     8    4.1   3.9   3.7   4.1   0.2
 6. 62.157.250.149                          0.0%     8    7.3   8.0   7.2   9.1   0.7
 7. 91.23.208.125                           0.0%     8   99.3  99.3  99.2  99.7   0.2
 8. *********.dip0.t-ipconnect.de           0.0%     8  123.5 123.4 116.8 132.7   5.8
```
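Comparing routes boils down to finding where two hop lists stop sharing a common prefix: every hop after that point on the failing route is a suspect. A toy sketch of that step; the function name is made up, hops 1 to 4 are elided in the mtr output and assumed to be shared, and the Telia hop is a placeholder because the other route isn't shown.

```python
from __future__ import annotations


def hops_after_divergence(route: list[str], reference: list[str]) -> list[str]:
    """Return the hops of `route` that follow the longest prefix shared with `reference`."""
    shared = 0
    for hop, ref_hop in zip(route, reference):
        if hop != ref_hop:
            break
        shared += 1
    return route[shared:]


if __name__ == "__main__":
    # Hops 5-7 from the mtr output (hop 8 is the home connection itself).
    my_route = [
        "be3363.ccr31.jfk04.atlas.cogentco.com",
        "62.157.250.149",
        "91.23.208.125",
    ]
    # Hypothetical placeholder for the working route, which leaves Cogent towards Telia.
    their_route = [
        "be3363.ccr31.jfk04.atlas.cogentco.com",
        "telia-hop.example",
    ]
    print(hops_after_divergence(my_route, their_route))
    # prints ['62.157.250.149', '91.23.208.125']
```

The sketch only narrows things down to hops 6 and 7; ruling out hop 7 still rests on the reasoning about it carrying all other traffic.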

I could write a tool that establishes a TCP connection and then sends large segments on it with a low IP TTL, basically the same thing traceroute does. The ICMP Time Exceeded response would contain the original packet's IP header, which I could use to see whether the packet had been rewritten yet or not. But this would be a lot of work: the normal TCP socket interface doesn't give you access to the TTL (I think), and I'd need to pull some stunts to get at the ICMP responses… But there's an easier way! Just ask on IRC whether anyone knows a tool that already does this (since DuckDuckGo and Google had failed me).
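For the ICMP side of such a tool, here is a sketch of decoding the IPv4 header that a router quotes in a Time Exceeded message (RFC 792 has it include the original IP header plus at least the first 8 bytes of the datagram). Comparing the quoted total length with what was sent would show whether the packet had already been modified on the way. Capturing the ICMP messages is not shown; on Linux that typically needs a raw socket and root privileges. For what it's worth, Python does expose `socket.IP_TTL`, which can be set with `setsockopt(socket.IPPROTO_IP, socket.IP_TTL, n)` on a TCP socket on Linux. The function name and the example packet are hypothetical.

```python
import struct

ICMP_TIME_EXCEEDED = 11
IP_FLAG_DF = 0x4000  # Don't Fragment bit in the IPv4 flags/fragment-offset field


def quoted_ip_header(icmp_message: bytes) -> dict:
    """Decode the IPv4 header quoted inside an ICMP Time Exceeded message.

    `icmp_message` starts at the ICMP header (the outer IP header is already stripped).
    """
    icmp_type = icmp_message[0]
    if icmp_type != ICMP_TIME_EXCEEDED:
        raise ValueError(f"not a Time Exceeded message (type {icmp_type})")
    inner = icmp_message[8:]  # skip type, code, checksum and 4 unused bytes
    version_ihl, _tos, total_length, _ident, flags_frag, _ttl, protocol = struct.unpack(
        "!BBHHHBB", inner[:10]
    )
    header_length = (version_ihl & 0x0F) * 4
    return {
        "version": version_ihl >> 4,
        "total_length": total_length,  # packet size as the router saw it
        "dont_fragment": bool(flags_frag & IP_FLAG_DF),
        "protocol": protocol,          # 6 = TCP
        "transport_bytes": inner[header_length:],  # at least the first 8 bytes
    }


if __name__ == "__main__":
    # Hypothetical example: a 1500-byte TCP packet with DF set, quoted by a router.
    original_ip = struct.pack(
        "!BBHHHBBH4s4s",
        0x45, 0, 1500, 0x1234, IP_FLAG_DF, 1, 6, 0,
        bytes([192, 0, 2, 1]), bytes([198, 51, 100, 2]),  # documentation addresses
    )
    first_tcp_bytes = struct.pack("!HHI", 58728, 40000, 1)  # ports and sequence number
    message = struct.pack("!BBHI", ICMP_TIME_EXCEEDED, 0, 0, 0) + original_ip + first_tcp_bytes
    info = quoted_ip_header(message)
    print(info["total_length"], info["dont_fragment"], info["protocol"])  # prints: 1500 True 6
```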

A few minutes later I find myself in ##networking on Freenode. I mention the weird TCP segment and ask for tooling. After some checking with tracepath, which doesn't yield any revealing results, I get asked for samples. Fine with me; I upload .pcaps from both machines. By now my head is already smoking. Then someone replies that something is strange: the server sent an Ethernet frame with 1512 bytes of payload. That shouldn't be happening: `ip link show ens3` shows that the MTU is 1500, not 1512. ##networking throws the words "buggy TCP/IP stack" into the room. I try lowering the MTU a bit, no dice. I lower it a whole bunch, still no dice: Wireshark still shows packets of the same size as before. Then I notice something even stranger: there are packets with 2900 bytes of payload! In response comes the suggestion that the NIC or its driver might be buggy with regard to offloads. Checking `ethtool -k ens3`, I see `tx-tcp-segmentation: on`. I turn it off using `ethtool -K ens3 tx-tcp-segmentation off` and there we go! Everything is fine now!
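With TCP segmentation offload enabled, the kernel hands the NIC TCP segments larger than the MTU and relies on the NIC to cut them into MSS-sized pieces. A capture on the host sees the segments before that split, which is consistent with Wireshark showing payloads larger than the configured MTU. The sketch models only the splitting step; `split_into_segments` and the numbers in the example are hypothetical, and real hardware also rewrites the IP and TCP headers and checksums of each piece.

```python
from __future__ import annotations

SEQ_SPACE = 2**32  # TCP sequence numbers wrap around modulo 2^32


def split_into_segments(payload_len: int, mss: int, first_seq: int = 0) -> list[tuple[int, int]]:
    """Simplified model of TCP segmentation offload.

    Cuts one oversized payload at MSS boundaries and gives every piece its own
    sequence number. Returns a list of (sequence number, payload length) pairs.
    """
    if mss <= 0:
        raise ValueError("mss must be positive")
    segments = []
    offset = 0
    while offset < payload_len:
        size = min(mss, payload_len - offset)
        segments.append(((first_seq + offset) % SEQ_SPACE, size))
        offset += size
    return segments


if __name__ == "__main__":
    # Hypothetical numbers: a 4000-byte super-segment and an MSS of 1440 bytes.
    for seq, size in split_into_segments(4000, 1440):
        print(seq, size)
    # prints:
    # 0 1440
    # 1440 1440
    # 2880 1120
```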

Investigating some more after turning TCP segmentation offload back on, I see that the large packets are sent with the Don't Fragment flag set. Capturing ICMP messages with Wireshark, I can see that hop 7 responds with an ICMP Fragmentation Needed message. Now everything is clear: those large packets don't fit down my small DSL pipe, and the contact who cross-checked the problem for me earlier has fiber and thus didn't have any issue! It seems that the Realtek NIC in the server does not split the large TCP segments correctly and drops most of the data. That still does not explain why only 4 bytes made it through and not 12 or 1440. The parts regarding the NIC are still speculation, though, and would need to be confirmed by sniffing what actually goes over the wire next to the server.
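When a packet with Don't Fragment set is larger than the next link's MTU, the router drops it and answers with ICMP type 3 (Destination Unreachable), code 4 (fragmentation needed and DF set). Per RFC 1191 the message also carries the next-hop MTU, which the sender's path MTU discovery uses to shrink its packets. A sketch of reading that field and of the router's drop decision; the function names are made up, and the 1492 in the example is an assumed value, since the post doesn't say which MTU hop 7 reported.

```python
import struct

ICMP_DEST_UNREACHABLE = 3
ICMP_CODE_FRAG_NEEDED = 4  # "fragmentation needed and DF set"


def next_hop_mtu(icmp_message: bytes) -> int:
    """Read the next-hop MTU from an ICMP Fragmentation Needed message (RFC 1191).

    Routers that predate RFC 1191 leave this field at 0.
    """
    icmp_type, code, _checksum, _unused, mtu = struct.unpack("!BBHHH", icmp_message[:8])
    if (icmp_type, code) != (ICMP_DEST_UNREACHABLE, ICMP_CODE_FRAG_NEEDED):
        raise ValueError(f"not a Fragmentation Needed message (type {icmp_type}, code {code})")
    return mtu


def must_drop(ip_total_length: int, dont_fragment: bool, link_mtu: int) -> bool:
    """True if a router has to drop the packet and answer with Fragmentation Needed."""
    return dont_fragment and ip_total_length > link_mtu


if __name__ == "__main__":
    # Hypothetical message advertising an MTU of 1492; the quoted original
    # IP header is reduced to 20 placeholder bytes.
    message = struct.pack("!BBHHH", ICMP_DEST_UNREACHABLE, ICMP_CODE_FRAG_NEEDED, 0, 0, 1492)
    message += bytes(20)
    mtu = next_hop_mtu(message)
    print(mtu)                         # prints 1492
    # 2900 bytes of payload + 20-byte IP header + 32-byte TCP header = 2952
    print(must_drop(2952, True, mtu))  # prints True
    print(must_drop(1492, True, mtu))  # prints False: fits exactly
```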

Lessons learned:

- Don't be too quick to blame routers on the internet; they know what they're doing! (probably)

- Don't trust your network card blindly!

Update:

Creating a systemd.link(5) configuration file for the network card (/etc/systemd/network/ens3.link in this case) and adding TCPSegmentationOffload=off in the [Link] section makes the fix permanent when using systemd-networkd.
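A minimal sketch of such a file. The `[Match]` section selects the device; matching on the MAC address is one option, and the address below is a placeholder from the documentation range, not the server's. According to systemd.link(5), only the first matching `.link` file (in lexical order across all directories) is applied, so the sketch also sets `Name=` to keep the interface called ens3 and uses a numeric file name prefix so it sorts ahead of the stock `99-default.link`. Both are precautions rather than something the setup above is known to need. The file is read by udev when the interface appears, so a reboot is the simplest way to try it.

```ini
# /etc/systemd/network/10-ens3.link (hypothetical file name)
[Match]
# Placeholder from the documentation MAC range; use the NIC's real address.
MACAddress=00:00:5e:00:53:01

[Link]
# Only the first matching .link file is applied, so this file also has to
# take care of naming; otherwise the interface may keep its kernel name.
Name=ens3
TCPSegmentationOffload=off
```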